The Critical Role of AI Governance: Crafting a Framework for Ethical, Responsible, and Transparent AI Innovation

InfoTech Research Group

Artificial Intelligence (AI) is becoming integral to industries ranging from healthcare and finance to entertainment and transportation. As its influence grows, it is crucial to ensure that AI systems are developed, deployed, and maintained responsibly and ethically. This is where AI governance comes into play.

AI governance refers to the set of rules, policies, ethical guidelines, and procedures designed to ensure that AI systems are used in a way that aligns with societal values, legal standards, and human rights. It is essential for maintaining trust and accountability while ensuring that AI technologies are used to benefit society without causing harm. In this article, we will explore the key principles, best practices, and frameworks of AI governance in an easy-to-understand way.

Key Principles of AI Governance

AI governance is underpinned by several key principles that aim to ensure that AI technologies are used ethically and responsibly. These principles help guide the development and use of AI systems across different industries.

1. Transparency

Transparency in AI governance means that organizations should be open about how their AI systems work. This includes explaining how data is collected, how algorithms make decisions, and which factors influence those decisions. Transparency helps build trust, as users and stakeholders can better understand the AI’s decision-making process, making it easier to identify potential issues like bias or unfair practices. For example, if an AI system is used to approve loans, transparency means the approval criteria are disclosed in a form applicants can understand, so that unfair criteria can be spotted and challenged.
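To make the loan example concrete, here is a minimal sketch of a scoring rule whose decision can be broken down into per-factor contributions. The factor names, weights, and threshold are hypothetical and chosen purely for illustration; they are not drawn from any real lender’s policy.

```python
# Hypothetical weights and approval threshold, for illustration only.
WEIGHTS = {
    "income_to_debt_ratio": 2.0,
    "years_employed": 0.5,
    "missed_payments": -1.5,
}
THRESHOLD = 5.0


def explain_decision(applicant):
    """Return the decision together with each factor's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    return {
        "approved": score >= THRESHOLD,
        "score": score,
        "contributions": contributions,
    }


applicant = {"income_to_debt_ratio": 3.0, "years_employed": 4, "missed_payments": 1}
result = explain_decision(applicant)
print(result)
```

Because every factor’s contribution is returned alongside the outcome, an applicant or auditor can see which criteria drove the decision. Real credit models are usually more complex, and explaining them may require dedicated explainability techniques.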

2. Fairness

AI systems must be designed to treat everyone impartially, without discriminating based on race, gender, age, or other personal characteristics. This principle addresses the risk of bias in AI models, which can lead to unfair outcomes. For instance, an AI model used in hiring should not favor one gender or ethnicity over others. To ensure fairness, AI developers need to carefully examine the data they use to train these models and make necessary adjustments to avoid biased decision-making.
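One simple way to examine outcomes for bias is to compare selection rates across groups. The sketch below assumes a hypothetical list of (group, selected) pairs from a hiring model; the group labels and counts are made up. It computes the ratio of the lowest to the highest selection rate. A ratio below 0.8 is a common rule of thumb (the "four-fifths rule") for flagging possible adverse impact, though it is only a screening signal, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns the selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means all groups have equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest


# Made-up outcomes: group A is selected 6 times out of 10, group B 3 times out of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

Selection-rate parity is only one of several fairness measures; which one is appropriate depends on the application and the legal context.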

3. Accountability

AI systems should have clear lines of accountability. This means that there should be individuals or organizations responsible for the actions and outcomes of an AI system. If an AI system causes harm or makes a wrong decision, it is important to know who is responsible and what steps will be taken to address the issue. Accountability helps ensure that the people behind AI technologies are held responsible for their impact on society, whether positive or negative.
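In practice, accountability depends on being able to trace a decision back to a specific system version and a named owner. The following is a minimal sketch of a decision audit record, under the assumption that decisions are logged at the time they are made. The field names, the system name, and the team name are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    system: str
    model_version: str
    accountable_owner: str  # person or team answerable for this system's outcomes
    input_digest: str       # hash of the inputs, so the record holds no raw personal data
    outcome: str
    recorded_at: str


def record_decision(log, system, model_version, owner, inputs, outcome):
    """Append an audit entry tying one outcome to a model version and an owner."""
    payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
    record = DecisionRecord(
        system=system,
        model_version=model_version,
        accountable_owner=owner,
        input_digest=hashlib.sha256(payload).hexdigest(),
        outcome=outcome,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(asdict(record))
    return record


audit_log = []
record = record_decision(
    audit_log, "loan-screening", "1.4.0", "credit-risk-team",
    {"applicant_id": 17, "score": 6.5}, "approved",
)
print(audit_log[0]["accountable_owner"])
```

A production audit trail would also need tamper-resistant storage and a retention policy, which this sketch leaves out.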

4. Human-Centric Design

AI systems should prioritize human well-being and align with human values. Rather than replacing humans, AI should work alongside people to improve lives, enhance productivity, and solve problems. Human-centric design ensures that AI technology supports, rather than undermines, social, ethical, and cultural values. For instance, AI in healthcare should prioritize patient care and safety, while in transportation, it should focus on reducing accidents and improving efficiency.

5. Privacy

Respecting individual privacy is a cornerstone of AI governance. AI systems often collect large amounts of data, and ensuring that this data is handled responsibly is crucial. Privacy protection involves adhering to regulations like the General Data Protection Regulation (GDPR) and implementing strong safeguards to prevent unauthorized access or misuse of personal information. It is important for AI developers to be transparent about how data is collected and to give individuals control over their data.

6. Safety and Security

AI systems must be protected against malicious use and unintended consequences. Security measures must be in place to prevent attackers from exploiting AI systems and causing harm. Additionally, safety mechanisms should be implemented to minimize risks, such as testing self-driving cars extensively so that they avoid accidents, or validating medical AI tools to reduce the risk of misdiagnosis.

Best Practices for AI Governance

Putting these principles into practice calls for concrete best practices that help ensure AI systems are developed and used ethically.

1. Manage Data Quality

The quality of data used to train AI models is critical to their success. Ensuring that the data is accurate, unbiased, and representative of the population it will serve is essential for producing reliable results. Developers should continuously monitor and update data to maintain its relevance and quality. For example, training an AI model to recognize faces should involve a diverse dataset to avoid bias towards certain ethnic groups.
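A basic representativeness check compares each group’s share of the training data with its share of the population the model will serve. The sketch below is one way to do this, assuming hypothetical group labels, reference shares, and a 5-percentage-point tolerance; none of these values come from a real dataset.

```python
from collections import Counter


def underrepresented_groups(labels, reference_shares, tolerance=0.05):
    """Return groups whose dataset share falls short of the reference share by more than tolerance."""
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for group, expected in reference_shares.items():
        actual = counts.get(group, 0) / total if total else 0.0
        if expected - actual > tolerance:
            gaps[group] = round(expected - actual, 3)
    return gaps


# Made-up dataset of 100 samples and made-up reference population shares.
labels = ["group_a"] * 70 + ["group_b"] * 27 + ["group_c"] * 3
reference = {"group_a": 0.5, "group_b": 0.3, "group_c": 0.2}
gaps = underrepresented_groups(labels, reference)
print(gaps)
```

Here only `group_c` is flagged, since it makes up 3% of the data against a 20% reference share. A check like this catches missing coverage but says nothing about label accuracy, which needs separate review.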

2. Implement Strong Data Security and Privacy Standards

Since AI systems rely heavily on data, ensuring that this data is secure and private is fundamental. Organizations should implement stringent data protection measures, including encryption, anonymization, and secure access protocols, to protect against breaches and misuse. Adhering to privacy laws and ensuring data collection is transparent are also critical practices.
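As one illustration of these measures, the sketch below drops direct identifiers from a record and replaces the remaining identifier with a keyed hash (HMAC-SHA256) using Python’s standard library. The field names and the key are hypothetical. Note that this is pseudonymization rather than full anonymization: anyone holding the key can recompute the mapping, so the key must be stored securely, and the remaining fields may still allow re-identification.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash so records can still be linked without exposing it."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, secret_key, id_field="email", drop_fields=("name", "phone")):
    """Drop fields that identify a person directly and pseudonymize the linking identifier."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned[id_field] = pseudonymize(record[id_field], secret_key)
    return cleaned


# Hypothetical key for illustration; a real key should come from a secret store, not source code.
key = b"example-key-for-illustration-only"
record = {"name": "Ada Example", "email": "ada@example.com", "phone": "555-0100", "loan_amount": 12000}
safe = strip_direct_identifiers(record, key)
print(sorted(safe))
```

The same input always maps to the same pseudonym under a given key, which lets analysts join datasets without seeing the original email address.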

3. Engage Stakeholders

Promoting transparency, accountability, and ethical considerations in AI development requires collaboration with a wide range of stakeholders. This includes engaging with experts in ethics, law, and technology, as well as with the communities that may be affected by AI systems. Engaging stakeholders ensures that AI systems reflect diverse perspectives and societal needs, fostering trust and encouraging responsible innovation.

4. Comply with Relevant Regulations

AI developers and organizations must comply with the laws, regulations, and standards that govern AI use in different regions. These rules are in place to protect users’ rights and ensure AI systems are used safely. Complying with regional AI regulations such as the European Union’s AI Act, and tracking proposed legislation such as the Algorithmic Accountability Act in the United States, helps organizations avoid legal risks and meet the ethical standards set by society.

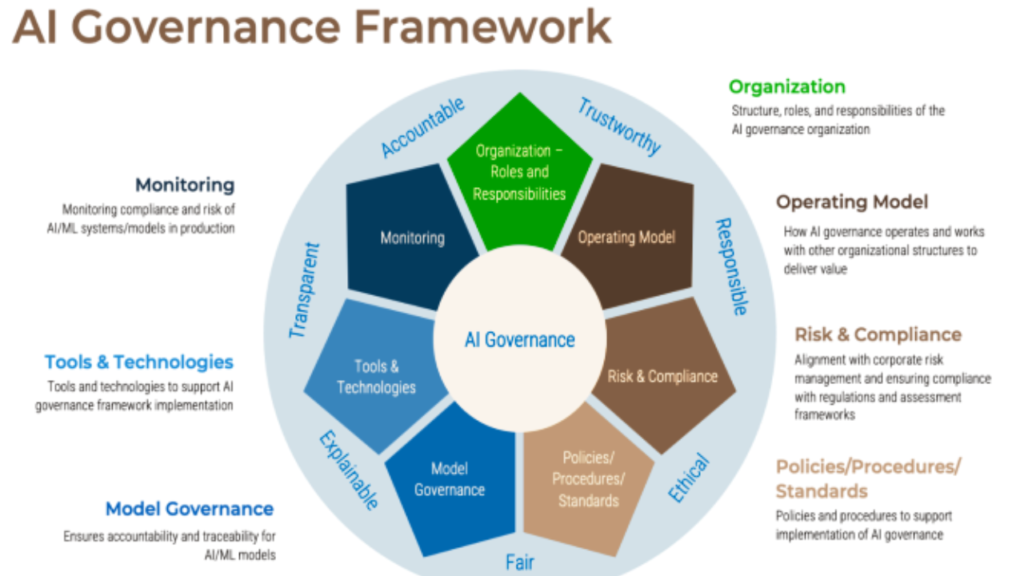

AI Governance Frameworks

Several AI governance frameworks have been proposed to help organizations manage the ethical use of AI. One such framework is the AIGA (Artificial Intelligence Governance and Auditing) framework, developed by researchers at the University of Turku in Finland. This framework is designed to help organizations assess and audit their AI systems to ensure they meet governance standards. The AIGA framework encourages organizations to consider ethical principles, transparency, accountability, and fairness in their AI systems.

A closely related framework, also from the AIGA researchers, is the Hourglass Model, which illustrates how AI governance flows from the broader social environment (such as cultural norms, societal values, and legal standards) through organizational policies and procedures down to individual AI systems. The Hourglass Model emphasizes the importance of aligning AI governance with societal expectations while ensuring that organizations are accountable for their AI systems’ actions.

As AI continues to shape our world, it is crucial to have a robust governance framework in place to guide its development and use. By adhering to key principles such as transparency, fairness, accountability, human-centric design, privacy, and safety, we can ensure that AI benefits society while minimizing its risks. Following best practices such as managing data quality, engaging stakeholders, and complying with regulations further strengthens the responsible use of AI.

Governance frameworks like AIGA and the Hourglass Model provide valuable tools for organizations to assess and improve their AI practices. Ultimately, effective AI governance will not only protect individuals and society but will also help foster innovation in a way that is ethical, responsible, and beneficial to all.
